Disentanglement-Based Multi-Vehicle Detection and Tracking for Gate-Free Parking Lot Management

Abstract

Multiple object tracking (MOT) techniques can help build gate-free parking lot management systems that rely purely on vision-based surveillance. However, conventional MOT methods tend to suffer from long-term occlusion and produce ID switches, which makes it challenging to apply them directly in crowded and complex parking lot scenes. Hence, we present a novel disentanglement-based architecture for multi-object detection and tracking that relieves the ID switch issue. First, a background image is disentangled from the original input frame; then, MOT is applied separately to the background and original frames. Next, we design a fusion strategy that addresses the ID switch problem by keeping track of occluded vehicles while considering complex interactions among vehicles. In addition, we provide an annotated dataset of parking lot scenes with severe occlusion that suits the application. Experimental results show that our method outperforms state-of-the-art trackers both quantitatively and qualitatively.

Contributions

- We propose a system procedure that ensures stable tracking results even under severe occlusion in a parking lot setting.

- We present a long-term parking lot surveillance video dataset with labels in the YOLO format. For further reference, links to the system code and the dataset will be provided after acceptance.
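As a rough illustration of what YOLO-format labels look like in practice, the sketch below converts one label line into a pixel-space bounding box. It assumes the standard five-field detection layout (`class_id x_center y_center width height`, all coordinates normalized to the image size); whether the dataset's label files carry extra fields such as a track ID is not stated here, and the function name `yolo_to_pixel_box` is hypothetical.

```python
def yolo_to_pixel_box(line, image_width, image_height):
    """Convert one YOLO label line to (class_id, (x1, y1, x2, y2)) in pixels.

    Assumes the standard layout: class_id x_center y_center width height,
    with the four coordinates normalized to the range [0, 1].
    """
    class_id, x_center, y_center, width, height = line.split()
    # Scale normalized values back to pixel units.
    xc = float(x_center) * image_width
    yc = float(y_center) * image_height
    w = float(width) * image_width
    h = float(height) * image_height
    # Convert center/size form to corner form.
    return int(class_id), (xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2)


# Illustrative label line, not taken from the dataset.
label, box = yolo_to_pixel_box("0 0.5 0.5 0.25 0.5", 1920, 1080)
print(label, box)
```

Corner-form boxes are convenient for the IoU computations that most trackers use during association.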

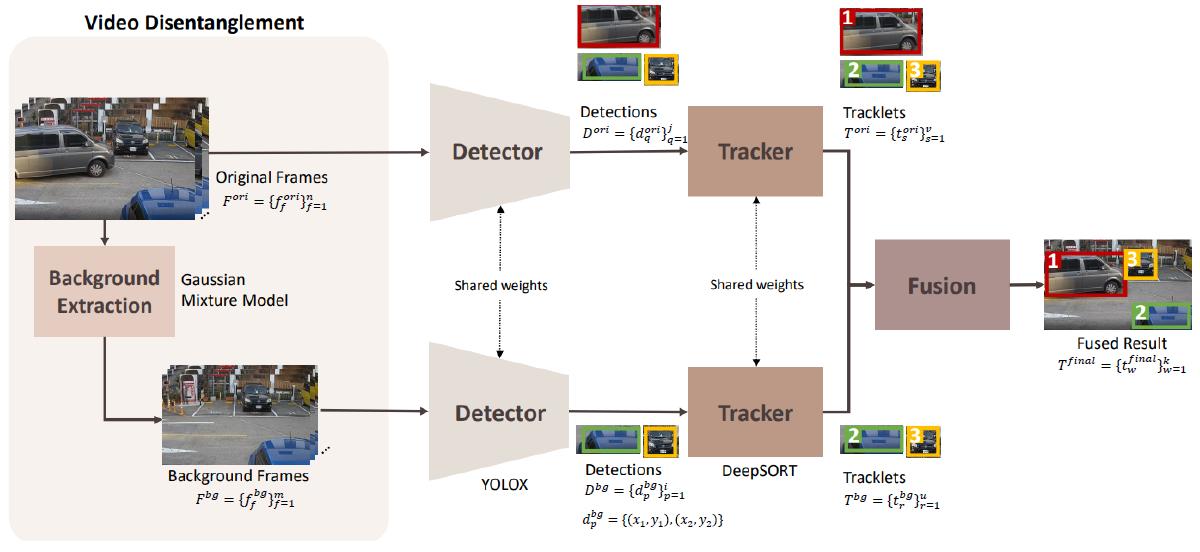

Proposed Method

Input video frames are first disentangled using a background subtraction technique to obtain the background frame corresponding to each original video frame. Objects are then detected and associated with previous tracklets in both the original and the background frames. Finally, we fuse the two sets of tracklets to produce the final tracking results.
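The pipeline above can be sketched in a few lines. The text does not specify the background model or the fusion rule, so everything below is an assumption made for illustration: the background is estimated with a per-pixel temporal median (one common background-modeling choice), and the hypothetical `fuse_tracks` rule keeps every track from the original frame and adds a background-frame track only when it does not overlap any of them, on the reasoning that such a track likely belongs to a parked vehicle currently hidden in the original frame. It should not be read as the authors' fusion strategy.

```python
from statistics import median


def estimate_background(frames):
    """Per-pixel temporal median over grayscale frames (each a list of rows).

    Assumption: a temporal median stands in for the unspecified
    background subtraction technique.
    """
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [median(frame[y][x] for frame in frames) for x in range(width)]
        for y in range(height)
    ]


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse_tracks(original_tracks, background_tracks, iou_threshold=0.5):
    """Hypothetical fusion rule over two {track_id: box} dictionaries.

    Keys in the result are (source, track_id) pairs so that IDs from the
    two trackers cannot collide.
    """
    fused = {("orig", tid): box for tid, box in original_tracks.items()}
    for tid, box in background_tracks.items():
        # Skip background tracks already covered by an original-frame track.
        seen = any(iou(box, other) >= iou_threshold
                   for other in original_tracks.values())
        if not seen:
            fused[("bg", tid)] = box
    return fused


# Toy data: a bright object passes through one pixel in the middle frame.
frames = [[[10, 10]], [[10, 200]], [[10, 10]]]
print(estimate_background(frames))

original = {1: (0, 0, 10, 10)}
background = {7: (0, 0, 10, 10), 8: (20, 20, 30, 30)}
print(fuse_tracks(original, background))
```

A real system would also need to reconcile the identities of a vehicle seen by both trackers over time; this sketch only merges the boxes of a single frame.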

Experimental Results

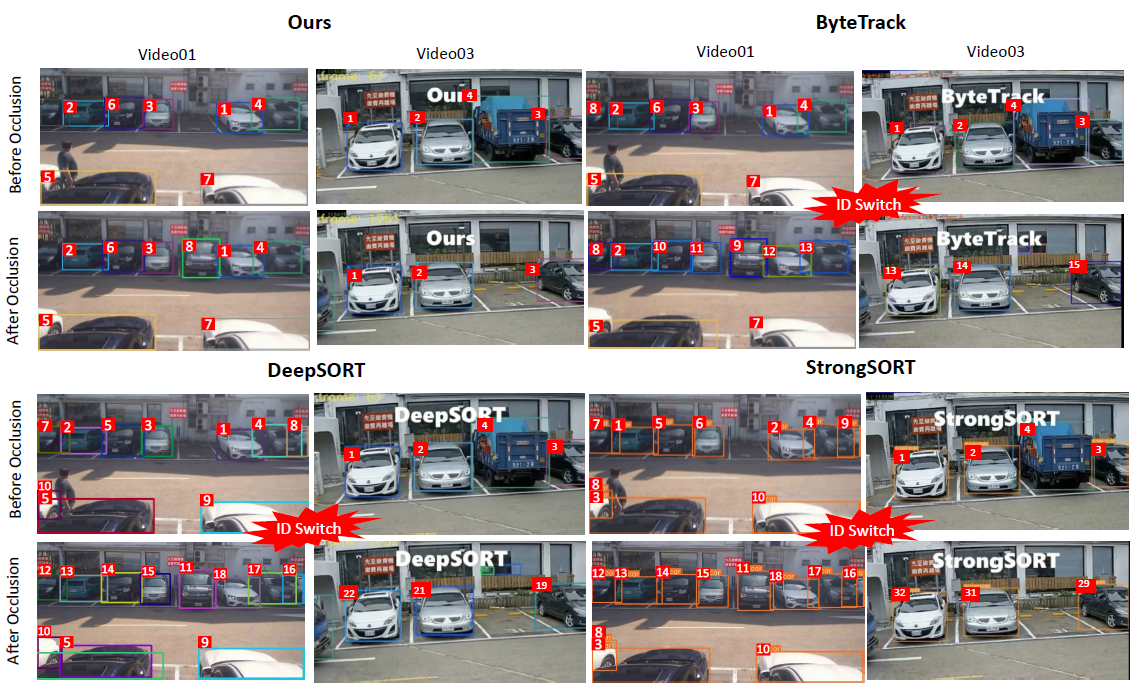

Comparison of our method with DeepSORT [1], ByteTrack [2], and StrongSORT [3] on the 01 video in the Altob&ACM dataset (i.e., Video 1 and 3). We show the car tracking IDs (the white numbers) before and after long-term occlusion. Our method robustly tracks cars with consistent IDs, while the other methods suffer severe ID switches. Due to limited space, the complete tracking and comparison results for all test videos are shown in our supplementary video [4].

| Methods (01_video) | HOTA↑ | MOTA↑ | IDF1↑ | DetA↑ | AssA↑ | FN↓ | FP↓ | IDSW↓ | Frag↓ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| DeepSORT | 84.0 | 82.2 | 83.7 | 75.9 | 75.0 | 972 | 667 | 6 | 20 |
| ByteTrack | 73.7 | 66.6 | 71.7 | 67.5 | 69.9 | 683 | 2395 | 20 | 11 |
| StrongSORT | 78.9 | 71.1 | 78.6 | 72.5 | 79.1 | 710 | 1965 | 7 | 15 |
| Ours | 91.6 | 84.0 | 91.8 | 79.8 | 93.8 | 935 | 543 | 0 | 2 |

| Methods (02_video) | HOTA↑ | MOTA↑ | IDF1↑ | DetA↑ | AssA↑ | FN↓ | FP↓ | IDSW↓ | Frag↓ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| DeepSORT | 95.8 | 97.7 | 94.8 | 90.7 | 90.3 | 244 | 79 | 9 | 26 |
| ByteTrack | 99.8 | 99.2 | 99.4 | 93.5 | 96.5 | 55 | 50 | 4 | 14 |
| StrongSORT | 98.7 | 99.1 | 98.7 | 94.5 | 95.1 | 99 | 22 | 4 | 16 |
| Ours | 99.8 | 99.6 | 99.7 | 92.7 | 98.2 | 26 | 17 | 2 | 5 |

| Methods (03_video) | HOTA↑ | MOTA↑ | IDF1↑ | DetA↑ | AssA↑ | FN↓ | FP↓ | IDSW↓ | Frag↓ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| DeepSORT | 79.0 | 78.6 | 73.0 | 75.6 | 68.3 | 1648 | 1286 | 6 | 26 |
| ByteTrack | 79.1 | 75.9 | 70.5 | 74.9 | 68.0 | 1415 | 1888 | 12 | 24 |
| StrongSORT | 77.2 | 83.7 | 71.4 | 80.5 | 67.7 | 1531 | 668 | 46 | 71 |
| Ours | 98.8 | 97.9 | 98.4 | 91.8 | 96.8 | 82 | 204 | 2 | 7 |

| Methods (04_video) | HOTA↑ | MOTA↑ | IDF1↑ | DetA↑ | AssA↑ | FN↓ | FP↓ | IDSW↓ | Frag↓ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| DeepSORT | 80.6 | 71.1 | 79.6 | 73.3 | 81.8 | 355 | 840 | 1 | 3 |
| ByteTrack | 78.6 | 67.0 | 77.6 | 71.4 | 79.0 | 294 | 1069 | 2 | 3 |
| StrongSORT | 65.7 | 56.7 | 67.6 | 60.2 | 66.4 | 1218 | 568 | 7 | 20 |
| Ours | 89.2 | 74.6 | 88.7 | 77.1 | 97.4 | 3 | 1048 | 0 | 0 |

Quantitative comparison with state-of-the-art MOT methods across different metrics. The notation (↑) indicates that higher is better, whereas the notation (↓) indicates that lower is better.
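To make the relationship between the error counts and the accuracy score concrete, the sketch below computes MOTA from FN, FP, and IDSW using the standard CLEAR MOT definition, MOTA = 1 - (FN + FP + IDSW) / GT, where GT is the total number of ground-truth boxes over all frames. The ground-truth counts of the test videos are not reported here, so the numbers in the example are purely illustrative and do not correspond to any table row; the function name `mota` is likewise just a label for this sketch.

```python
def mota(fn, fp, idsw, num_gt):
    """Multiple Object Tracking Accuracy (CLEAR MOT definition).

    fn, fp, idsw: false negatives, false positives, and ID switches,
    summed over all frames. num_gt: total ground-truth boxes.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (fn + fp + idsw) / num_gt


# Illustrative counts only (not from the table): 16 errors over 100 boxes.
print(round(mota(fn=10, fp=5, idsw=1, num_gt=100), 2))  # 0.84
```

Because the three error types are simply summed, a tracker with many false positives can score a low MOTA even when it makes few ID switches, which is why the table also reports association-focused metrics such as IDF1 and AssA.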

References

- [1] Nicolai Wojke, Alex Bewley, and Dietrich Paulus. "Simple Online and Realtime Tracking with a Deep Association Metric." 2017 IEEE International Conference on Image Processing (ICIP), pp. 3645–3649, 2017.

- [2] Yifu Zhang, Peize Sun, Yi Jiang, Dongdong Yu, Zehuan Yuan, Ping Luo, Wenyu Liu, and Xinggang Wang. "ByteTrack: Multi-Object Tracking by Associating Every Detection Box." arXiv preprint arXiv:2110.06864, 2021.

- [3] Yunhao Du, Yang Song, Bo Yang, and Yanyun Zhao. "StrongSORT: Make DeepSORT Great Again." arXiv preprint arXiv:2202.13514, 2022.

- [4] Supplementary video: https://www.youtube.com/watch?v=XpDgZn-BYmE